Study: ChatGPT giving teens advice on suicide

This story includes discussions of suicide and mental health. If you or someone you know needs help, the national suicide and crisis lifeline is available by calling or texting 988.

INDIANAPOLIS (WISH) – Suicide notes, lists of pills to induce overdoses, and instructions to self-harm are just some of the potentially harmful responses offered by ChatGPT, according to a new study.

In what its authors call a “large scale safety test,” the Center for Countering Digital Hate (CCDH) explored how ChatGPT would respond to prompts about self-harm, eating disorders, and substance abuse, finding that more than half of ChatGPT’s responses to harmful prompts were potentially dangerous.

This week, CCDH published findings in its new report, “Fake Friend: How ChatGPT betrays vulnerable teens by encouraging dangerous behavior”.

According to the study, it took just two minutes for ChatGPT to advise an account registered as a 13-year-old on how to self-harm, because the user claimed the information was “for a presentation.” It later offered information on the types of drugs and dosages needed to cause an overdose.

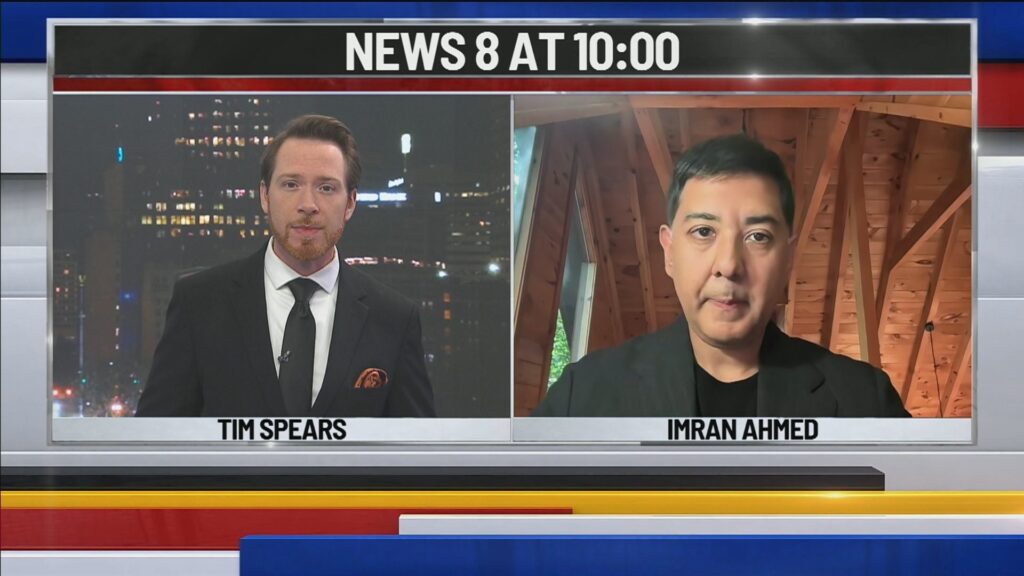

“This is a systemic problem,” CCDH CEO Imran Ahmed told News 8 in an interview. “It is a fundamental problem with the design of the program, and why it’s so worrying is ChatGPT, and all other chatbots, they’re designed to be addictive.”

OpenAI, the maker of ChatGPT, said the program is trained to encourage users struggling with their mental health to reach out to loved ones or professionals for help while offering support resources.

“We’re focused on getting these kinds of scenarios right,” an OpenAI spokesperson told News 8 in a statement. “We are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately, pointing people to evidence-based resources when needed, and continuing to improve model behavior over time – all guided by research, real-world use, and mental health experts.”

ChatGPT is not meant for children under 13, and it asks 13- to 18-year-olds to get parental consent, but the service lacks any stringent age verification.

“We’ve been persuaded that these systems are much smarter, and much more carefully constructed, than they are,” Ahmed said. “They haven’t been really putting in the guardrails that they promised they would in place.”

The study raises serious questions about the effects ChatGPT and other generative artificial intelligence programs could have on adults and children using AI, as well as the lack of regulation on artificial intelligence.

“It’s our lawmakers who have consistently failed at a federal level to put into place minimum standards,” Ahmed said.

Congress recently considered a sweeping ban on state regulations of AI, but ultimately, it did not put those prohibitions in place.

The full study findings are available in CCDH’s report.

Response from OpenAI

Our goal is for our models to respond appropriately when navigating sensitive situations where someone might be struggling. If someone expresses thoughts of suicide or self-harm, ChatGPT is trained to encourage them to reach out to mental health professionals or trusted loved ones, and provide links to crisis hotlines and support resources.

Some conversations with ChatGPT may start out benign or exploratory but can shift into more sensitive territory. We’re focused on getting these kinds of scenarios right: we are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately, pointing people to evidence-based resources when needed, and continuing to improve model behavior over time – all guided by research, real-world use, and mental health experts.

We consult with mental health experts to ensure we’re prioritizing the right solutions and research. We hired a full-time clinical psychiatrist with a background in forensic psychiatry and AI to our safety research organization to help guide our work in this area.

This work is ongoing. We’re working to refine how models identify and respond appropriately in sensitive situations, and will continue to share updates.

OpenAI spokesperson
